August 17, 2008

The paper is A review of imaging techniques for systems biology (BMC Systems Biology 2008, 2:74). Pixcavator is listed in “Table 2 - Overview of microscopy image analysis software” along with a few other companies/products. All the usual suspects are here: Image-Pro from Media Cybernetics, ImageJ, CellProfiler, Clemex Vision. The rest are less familiar and some of the companies are mostly about hardware. The paper itself is about imaging methods, not image analysis. Even though this is not an endorsement by any stretch of the imagination, it’s nice to be mentioned. (Smart people will also notice a few products that aren’t mentioned.) BMC stands for BioMed Central.

May 27, 2008

TinEye is an image-to-image search engine from Idée. It is in closed testing but I got to try it a couple of days ago. After a very positive review at TechCrunch, I decided to write up my impressions (a review of an earlier version is here).

They don’t make wild claims about being able to do face identification or similar (unsolved) problems. The goal seems very simple: find copies of images. With this task TinEye does a fairly good job. It finds even ones that have been modified - noise, color, stretch, crop, some photoshopping. It does not do well with rotation. That’s a major drawback (compare to Lincoln from MS Research).

These are the images that I tried.

Barbara: found both color and bw copies and a slightly cropped version.

Marilyn: found cropped and stretched versions, and even an edited (defaced) version.

Lenna: found both color and bw, but not partial or rotated versions (even though a rotated version is in the index).

May 7, 2008

After Google “launched” its ImageRank - by presenting a paper about it, now there are two more.

First, Idée “publicly launched” its image search engine (report here). If you want to try it, they’ll put you on a waiting list. How is it different from what we saw before?

Second, “Pixsta launches image search engine” (report here). Testing is also closed. What is the difference from what we saw before?

The only good thing here is that I discovered a better term for visual image search, CBIR, etc. It’s “image-to-image search”, as opposed to the text-to-text and text-to-image search we are familiar with.

May 2, 2008

I read this press release a few weeks ago. Just like many others it presents some over-optimistic report of a new method that is supposed to solve a problem. Just like many others it’s about face recognition. For a change I decided to read the paper the report is based on and write up my thoughts.

First, the paper itself is much more modest than the press release. That’s very common. Let’s look closer.

The traditional approach to face identification is to look for distinctive features – eyes, nose, mouth - and then match them with those of the other image or images. Here the approach is to take everything in the image, every “feature”. First, let’s make this clear: when they say “features” they mean simply pixels! I have no idea why… They also don’t emphasize the obvious consequence – the method should work with any images, not just faces.

This language of “features” obscures a common and straightforward approach to data representation and pattern recognition, as follows. Suppose you have a collection of 100×100 images. Then you rearrange the rows of this 100×100 “matrix” into a 10,000-vector. As a result, each image is represented as a point in the 10,000-dimensional space. This is clearly a brute force approach. However, something like that is inevitable if you don’t have an insight into the nature of the problem. Once all the data is in a Euclidean space (no matter how large), all statistical, data processing and pattern recognition methods can be used. Nice! The most common method is probably clustering – looking for groups of points unusually close to each other.
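To make the rearrangement concrete, here is a minimal sketch in plain Python. The function name flatten and the sample image are my own illustration, not anything taken from the paper.

```python
def flatten(image):
    """Concatenate the rows of a 2D image (a list of rows) into one vector."""
    return [pixel for row in image for pixel in row]

# A hypothetical 100x100 black image with one white pixel at row 2, column 3.
image = [[0] * 100 for _ in range(100)]
image[2][3] = 1

vector = flatten(image)
print(len(vector))      # 10000
print(vector.index(1))  # 203, i.e. 2 * 100 + 3
```

The pixel at (row, column) lands at position row × 100 + column, so the image becomes a single point in the 10,000-dimensional space.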

I have always felt OK about this approach but this time I started to doubt its applicability in analysis of images.

The first thing you notice is that this approach can only work as long as all images have the same dimensions. It gets trickier if you study images of different dimensions. For example, if you had both 30×20 images and 1×600 images in the collection, that would really mess up everything! In a less extreme case, the presence of 30×20 and 20×30 images in the collection would be a problem. Of course you can simply add extra blank pixels up to 30×30 as a “common denominator”. However, it appeared to me that such a problem (and such an awkward solution) may be an indication of bigger issues with the whole approach.
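The padding workaround might look like the sketch below. The helper pad is hypothetical, and I am assuming that 30×20 means 30 rows by 20 columns and that the blank pixels go on the right and at the bottom. That placement is an arbitrary choice, which is part of the awkwardness.

```python
def pad(image, height, width, blank=0):
    """Pad a 2D image (a list of rows) with blank pixels to height x width."""
    padded = [list(row) + [blank] * (width - len(row)) for row in image]
    padded += [[blank] * width for _ in range(height - len(image))]
    return padded

tall = [[1] * 20 for _ in range(30)]  # 30 rows, 20 columns
wide = [[1] * 30 for _ in range(20)]  # 20 rows, 30 columns

tall_padded = pad(tall, 30, 30)
wide_padded = pad(wide, 30, 30)

# Both now have 30 * 30 = 900 pixels and fit in the same 900-dimensional space.
print(sum(len(row) for row in tall_padded))  # 900
print(sum(len(row) for row in wide_padded))  # 900
```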

I asked myself, does this approach preserve the structural information contained in the image? The very first thing to look at is the adjacency of pixels. Since each pixel corresponds to an independent dimension, it seems that the adjacency is still contained in those coordinates: (a,b,…) is not the same as (b,a,…). Wrong!

It suffices to look at the distance between points – images - in this 10,000-dimensional space. It can be defined in a number of ways, but as long as it is symmetric with respect to the coordinates (as the Euclidean distance is) we have a problem. Suppose the distance between (1,0,…,0) and (0,1,0,…,0) is d. Then the distance between (1,0,…,0) and (0,0,…,0,1) is also d. Here (1,0,…,0) and (0,1,0,…,0) are two images with a single pixel in each – located adjacent to each other - while (0,0,…,0,1) has a pixel in the opposite corner! The result is odd and you have to ask yourself, can clustering be meaningful here?
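A quick check of this claim, as a sketch that assumes the Euclidean distance (one choice among the many possible) and uses hypothetical helper names:

```python
import math

def euclidean(u, v):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def single_pixel(index, size=10_000):
    """A flattened 100x100 image with exactly one pixel turned on."""
    vector = [0] * size
    vector[index] = 1
    return vector

first = single_pixel(0)        # pixel in the top-left corner
neighbor = single_pixel(1)     # pixel right next to it
far = single_pixel(9_999)      # pixel in the opposite corner

print(euclidean(first, neighbor) == euclidean(first, far))  # True
```

Both distances are the square root of 2. The distance sees only that two coordinates differ, not where those coordinates sit in the image.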

More to come…

April 29, 2008

A paper appeared recently on how to improve Google search. It has received a lot of media coverage including NY Times and TechCrunch. Since this is a topic that interests me a lot, I decided to write a few words.

The most important thing to understand here is that the paper isn’t about improving image search in general (especially visual image search and CBIR, see here). It is specifically about Google image search (and indirectly other search engines, MSN, Yahoo, etc). The goal is to improve it (because it sucks). It is currently based on surrounding text and as a result you get a lot of irrelevant images. Essentially, they add to this approach some image analysis. What kind? Not the best kind – “descriptors”. So there will be no analysis of the content of the image (see Fields related to computer vision). Even so, the descriptors will help to evaluate similarity between images - to a certain degree.

To summarize, some similarity measure plus hyperlinks - that will help with improving the search results for sure. Meanwhile, image search, image recognition etc remain unsolved.

April 26, 2008

I was reading this interview with Donald Knuth and “literate programming” was mentioned. I went to their site and this is what explained to me what it’s about:

Instead of writing code containing documentation, the literate programmer writes documentation containing code.

Suddenly I realized: that’s it! That’s what I’ve been trying to do in the wiki. It should be text illustrated with code not vice versa. Interesting…

March 30, 2008

A new post at TechCrunch just appeared: Image Recognition Problem Finally Solved: Let’s Pay People To Tag Photos. A new company apparently provides image recognition for photo tagging - but only with human help! That’s not surprising to me. What is interesting is the change of attitude at TechCrunch: “A trail of failed startups have tried to tackle the problem… Google has effectively thrown in the towel…” After so many enthusiastic articles about image recognition technology somebody finally saw the light. And so did the founder of Riya. For a much longer list of “failed startups” in this area try this article about visual image search engines.

P.S. When I tried to reply to their post with a two-sentence comment, it was rejected. How odd!

P.P.S. The TechCrunch post was about TagCow; now I see a very recent post (elsewhere) about Picollator. They claim they have a visual image search engine for faces. People will keep trying….

March 28, 2008

Here I finish (part 1 and part 2) my short review of Quantitative Biological Image Analysis by Erik Meijering and Gert van Cappellen.

The last two items on the list of fields are the following.

Computer Graphics: numbers in -> image out. Instead of numbers one could have math functions that produce numerical descriptions of images. These descriptions are likely to be different from those in computer vision: vector vs. raster images (the difference is in fact superficial from the point of view of cell decomposition). It’s also “the inverse of image analysis”. That would seem to imply that if you use Image Analysis followed by Computer Graphics you’ll end up with the original image. That would make sense only if the data produced by image analysis does not go very deep (not image segmentation or Fourier transform etc). I think that Computer Graphics is simply irrelevant for Computer Vision.

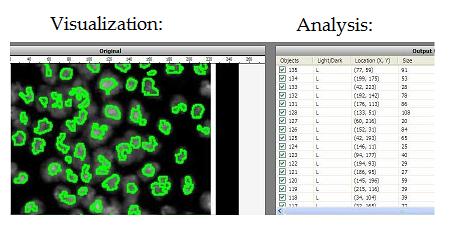

Visualization: image in -> representation out. The idea is that high dimensional image data is transformed into a more primitive representation. Displaying contours of objects is an example of that, illustrated below with Pixcavator. “Pseudocoloring” is an interesting subtopic here even though it can be also classified as image processing.

In conclusion, a couple of quotes from the article. In spite of the disagreement, I am glad that there are people thinking about these issues.

Although it is certainly possible to categorize problems, in a sense each biological study is unique: being based on specific premises and hypotheses to be tested, giving rise to unique image data to be analyzed, and requiring dedicated image analysis methods in order to take full advantage of this data.

It seems to me that there is nothing here that would make these fields/methods/problems limited to biological applications (or medical).

All too often, scientific publications report the use of image analysis tools without specifying which algorithms were involved and how parameters were set, making it very difficult for others to reproduce or compare results.

I think it is the common attitude presented in the first quote that causes this problem. The solution is obvious:

Most of image analysis should be context independent.

In other words, it should be mathematical. Once mathematical issues are understood, image analysis becomes a tool, like a calculator or spreadsheet software.

P.S. I’ll try to rewrite the list and put it in the wiki under Fields related to Computer Vision.

March 23, 2008

I liked this recent blog post 10 Important Differences Between Brains and Computers. The reason is that it gives plentiful evidence in favor of my contention that in designing computer vision systems one shouldn’t try to imitate the human. There are two main reasons. Firstly, computers and brains are very different. Secondly and more importantly, we don’t really know how brains operate!

March 17, 2008

and it’ll go on a killing spree. Amazing video of robotic motion! See how it recovers when kicked (it doesn’t kick back - yet!) and when it slipped on ice.

January 26, 2008

A study came out of MIT a couple of days ago. According to the press release the study “cautions that this apparent success may be misleading because the tests being used are inadvertently stacked in favor of computers”. The image test collections such as Caltech101 make image recognition too easy by, for example, placing the object in the middle of the image.

The titles of the press releases were “Computer vision may not be as good as thought” or similar. I must ask, Who thought that computer vision was good? Who thought that testing image recognition on such a collection proves anything?

A quick look at Caltech101 reveals how extremely limited it is. In the sample images the objects are indeed centered. This means that the photographer gives the computer a hand. Also, there is virtually no background – it’s either all white or very simple, like grass behind the elephant. Now the size of the collection: 101 categories with most having about 50 images. So far this looks too easy.

Let’s look at the pictures now. It turns out that there is another problem elsewhere. The task is in fact too hard! The computer is supposed to see that the side view of a crocodile represents the same object as the front view. How? By training. Suggested number of training images: 1, 3, 5, 10, 15, 20, 30.

The idea of “training” (or machine learning) is that you collect as much information about the image as possible and then let the computer sort it out by some sort of clustering. One approach is appropriately called “a bag of words” - patches in images are treated the way Google treats words in text, with no understanding of the content. You can only hope that you have captured the relevant information that will make image recognition possible. Since there is no understanding of what that relevant information is, there is no guarantee.
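Here is a toy illustration of the “bag” idea. It is a deliberate simplification: real systems compute descriptors for patches and quantize them, while this sketch (with hypothetical function names) just counts raw 2×2 patches as if they were words.

```python
from collections import Counter

def patches(image, size):
    """Yield non-overlapping size x size patches as hashable tuples."""
    for top in range(0, len(image) - size + 1, size):
        for left in range(0, len(image[0]) - size + 1, size):
            yield tuple(tuple(image[top + r][left:left + size]) for r in range(size))

def bag_of_patches(image, size=2):
    """Count how often each patch occurs, ignoring where it occurs."""
    return Counter(patches(image, size))

image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
rearranged = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

print(bag_of_patches(image) == bag_of_patches(rearranged))  # True
```

The two images are different, yet their bags are identical: the arrangement of the patches is thrown away, just as word order is thrown away in a bag of words.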

Then how come some researchers claim that their methods work? Good question. My guess is that by tweaking your algorithm long enough you can make it work with a small collection of images. Also, just looking at color distribution could give you enough information to “categorize” some images – in a very small collection, with very few categories.

My suggestion: try black-and-white images first!

January 4, 2008

Via TechCrunch. Google filed a patent application “Recognizing Text In Images”. It is supposed to improve indexing of internet images and also read text in images from street views, store shelves etc. Since it is related to computer vision, I decided to take a look.

First I looked at the list of claims of the patent. Those are legal statements (a few dozen in this case) that should clearly define what is new in this invention. That’s what would be protected by the patent. The claims seem a bit strange. For example it seems that the “independent” claims (ones that don’t refer to other claims) end with “and performing optical character recognition on the enhanced image”. So, there seems to be no new OCR algorithms here…

According to some comments, the idea is that there is no “OCR platform being able to do images on the level that is suggested here”. It may indeed be about a “platform” because the claims are filled with generalities. Can you patent a “platform”? Basically you put together some well known image processing-manipulation-indexing methods plus some unspecified OCR algorithms and it works! This sounds like a “business method” patent. In fact, in spite of its apparent complexity the patent reminds me of the one-click patent. And what is the point of this patent? Is it supposed to prevent Yahoo or MS from doing the same? Are we supposed to forget that it has been done before?

December 23, 2007

Yesterday TechCrunch announced the finalists of Crunchies - its awards for best start-up companies/products. Twenty categories - all boring! Except for one at the top - Best technology innovation / achievement. The interesting part is that 3 out of 5 finalists have computer vision related products! This seems fair to me but, sadly, there is nothing to celebrate here. Certainly not from the point of view of technology. These are the companies:

Like.com – “likeness” image search for shopping. Searches sometimes make sense but also seem cooked up. Even then the engine is easy to trick: a search for a watch with a secondary dial returned many watches without. Bottom line, nothing new after a whole year.

Earthmine – reconstructing cities from street views, “first geospatially accurate and complete street-level 3D data”. Well, conversion of 2D to 3D isn’t going to work. If they collect images continuously by driving through the streets and then patch the images together (that’s unclear), they get a 3rd dimension. Bottom line, even their demo shows only static (panoramic) shots, not a true 3D reconstruction.

Viewdle – face recognition in videos. Makes a good demo but there is no way to test it. Can such technology ever be reliable? Once again it’s the 2D-to-3D issue. Bottom line, how is it better than Polar Rose?

None gets my vote.

December 9, 2007

For a while I’ve been planning to resume reviews of image search applications but couldn’t decide which one to start with. The decision was made for me – coverage of Polar Rose (last reviewed in March) appeared. Was it in TechCrunch? No, it appeared in Time Magazine!

They should be embarrassed.

Let’s start with this quote at the top of the page in large letters: “these Tech Pioneers show that the best technology is often just a new way of thinking about an old problem” (bold face theirs). So you don’t need expertise or hard work, all you need is to be original. A feel-good idea for little kids…

Now about the article itself. It occupies just a half-page but there is also a whole page picture on the front page of the series “Tech Pioneers”. As far as image search is concerned the article repeats the old promises of the founder: “click on a photo to search for other photos”, “turns photographs into 3-D images”. A new promise is this: plug-in for FF is given away and the one for IE coming soon. OK fine, let’s go to the site, download and test it. Imagine my surprise when I discovered that there is only beta testing going on! The testing is closed and there is no way to try it. How come it’s not available? “It’s a very slow business…”, according to the founder.

My guess is that the reporter didn’t try it either. Apparently, the whole thing came to Time from the World Economic Forum. Here Polar Rose promises to launch face matching “later this year”. We’ll see…

November 2, 2007

Recently I read this press release: computers get “common sense” to tell a tennis ball from a lemon, right! I quickly dismissed it as another over-optimistic report about a project that will go nowhere. Then I read a short comment (by ivankirgin) here about it.

Common sense reasoning is one of the hardest parts of AI. I don’t think top-down solutions will work. …You can’t build a top down taxonomy of ideas and expect everything too work. You can’t just “hard code” the ideas.

I can’t agree more. But then he continues:

I think building tools from the ground up, with increasingly complicated and capable recognition and modeling, might work. For example, a visual object class recognition suite that first learned faces, phones, cars, etc. and eventually moved on to be able to recognize everything in a scene, might be able to automatically perhaps with some training build up the taxonomy for common sense.

First, he simply does not go far enough. I’d start with an even lower level – find the “objects”, their locations, sizes, shapes, etc. This is the “dumb” approach I’ve been suggesting in this blog and the wiki. Once you’ve got those abstract objects, you can try to figure out what those objects represent. In fact, often you don’t need even that. For example, for a home security system you don’t need to detect faces to sound the alarm. The right range of sizes will do. A moving object larger than a dog and smaller than a car will trigger the alarm. All you need is a couple of sliders to set it up. Maybe the problem with this approach is that it’s too cheap?
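The whole “algorithm” for the alarm fits in a few lines. This is only a sketch: the function name and the pixel-area thresholds are made up for illustration, and it assumes that something else has already found the moving object and measured its size.

```python
def should_alarm(object_area, min_area, max_area):
    """Trigger when a moving object's size falls strictly between the two sliders."""
    return min_area < object_area < max_area

# Hypothetical slider settings, in pixels of image area.
DOG_AREA = 2_000
CAR_AREA = 50_000

print(should_alarm(500, DOG_AREA, CAR_AREA))     # False: smaller than a dog
print(should_alarm(10_000, DOG_AREA, CAR_AREA))  # True: person-sized
print(should_alarm(80_000, DOG_AREA, CAR_AREA))  # False: larger than a car
```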

Another point was about training and machine learning. I have big doubts about the whole thing. It is very much like trying to imitate the brain (or something else we observe in nature). Imagine you have a task that people do easily but you don’t understand how they do it. Now you solve the problem in these three easy steps.

- You set up a program that supposedly behaves like the human brain (something you don’t really understand),

- you teach it how to do the task by providing nothing but feedback (because you don’t understand how it’s done),

- the program runs pattern recognition and solves the problem for you.

Nice! “Set it and forget it!” (Here is another example of this approach.) If you can teach a computer to recognize objects, you can teach it simpler things. How about teaching a computer to add based entirely on feedback? Well, this topic deserves a separate post…
