April 29, 2008

A paper appeared recently on how to improve Google image search. It has received a lot of media coverage, including in the NY Times and TechCrunch. Since this is a topic that interests me a lot, I decided to write a few words.

The most important thing to understand here is that the paper isn’t about improving image search in general (especially visual image search and CBIR, see here). It is specifically about Google image search (and indirectly about other search engines: MSN, Yahoo, etc.). The goal is to improve it (because it sucks). Google image search is currently based on the text surrounding an image, and as a result you get a lot of irrelevant images. Essentially, the authors add some image analysis to this approach. What kind? Not the best kind – “descriptors”. So there will be no analysis of the content of the image (see Fields related to computer vision). Even so, the descriptors will help to evaluate similarity between images, to a certain degree.

To summarize: some similarity measure plus hyperlinks will surely help improve the search results. Meanwhile, image search, image recognition, etc. remain unsolved.
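To make "similarity via descriptors" a bit more concrete, here is a minimal Python sketch under stated assumptions: the descriptor is a coarse color histogram and the similarity measure is histogram intersection. This is not the method of the paper discussed above; the function names, the 4 bins per channel, and the toy pixel lists are all hypothetical.

```python
from collections import Counter

def color_histogram(pixels, bins_per_channel=4):
    """Coarse, normalized RGB histogram: a simple (local) image descriptor."""
    step = 256 // bins_per_channel
    counts = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    total = len(pixels)
    return {bin_: n / total for bin_, n in counts.items()}

def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1 means identical color distributions."""
    return sum(min(h1.get(k, 0.0), h2.get(k, 0.0)) for k in set(h1) | set(h2))

# Hypothetical toy "images", given as flat lists of RGB pixels.
mostly_red = [(250, 10, 10)] * 90 + [(255, 255, 255)] * 10
red_and_white = [(240, 20, 5)] * 70 + [(255, 255, 255)] * 30
blue_sky = [(20, 40, 230)] * 100

print(round(histogram_intersection(color_histogram(mostly_red), color_histogram(red_and_white)), 2))  # 0.8
print(round(histogram_intersection(color_histogram(mostly_red), color_histogram(blue_sky)), 2))       # 0.0
```

Note that the histogram ignores where the colors are: a red square and a red circle of the same area on the same background get identical histograms. That is why such descriptors help only to a certain degree.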

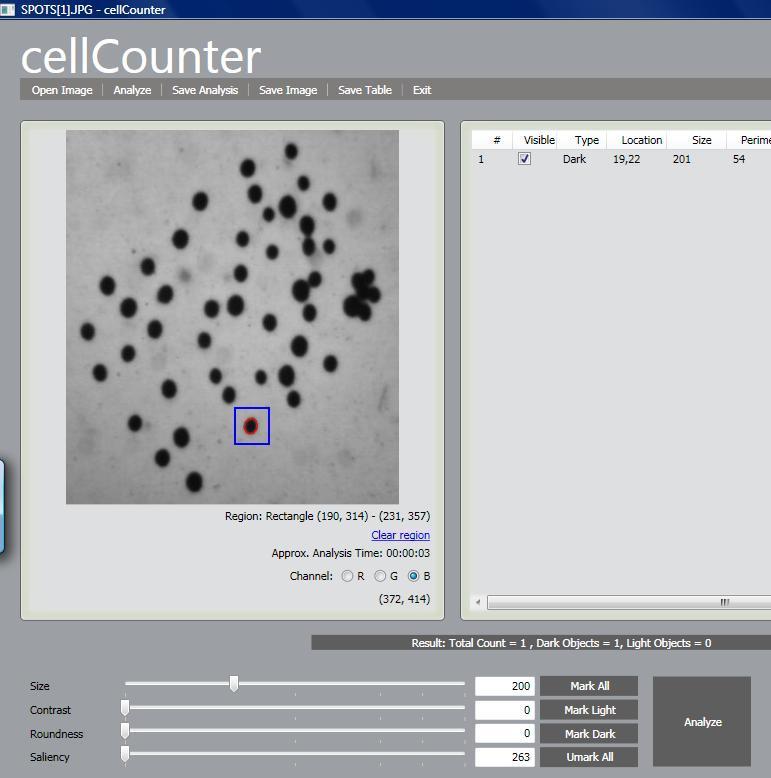

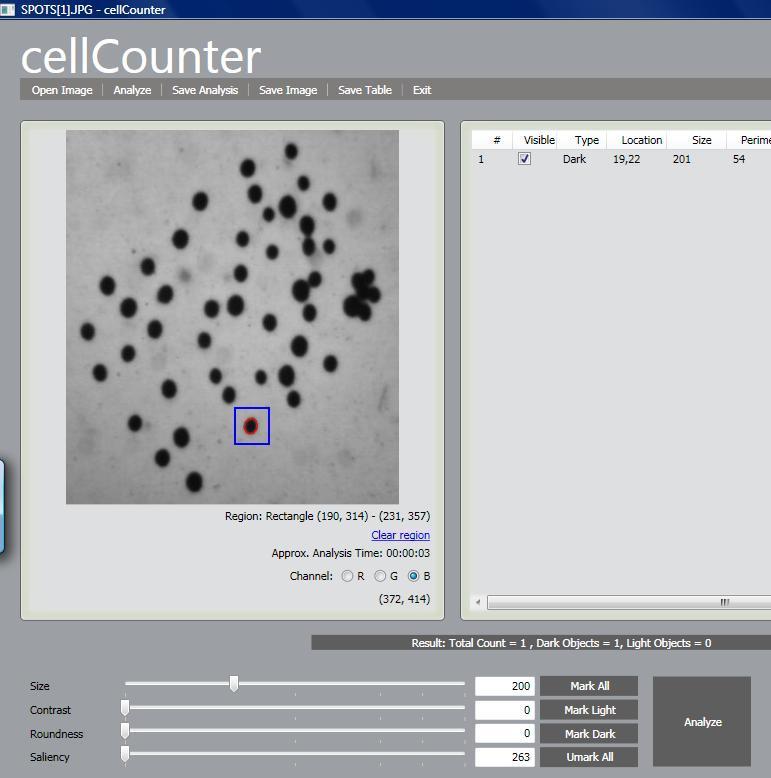

The next release of cellAnalyst, due in May, will have one especially nice feature. The hardest part for new users in getting the best from cellAnalyst (and Pixcavator) is discovering good analysis settings: size, contrast, etc. Trial and error takes too long, especially for larger images. Now the user will be able to analyze a small rectangle to find the measurements of the objects (cells) he is interested in and then apply these settings to the analysis of the whole image. This is described in more detail in the wiki under Analysis Strategy.

April 26, 2008

I was reading this interview with Donald Knuth, and “literate programming” was mentioned. I went to their site, and this is what explained to me what it’s about:

Instead of writing code containing documentation, the literate programmer writes documentation containing code.

Suddenly I realized: that’s it! That’s what I’ve been trying to do in the wiki. It should be text illustrated with code, not vice versa. Interesting…

April 19, 2008

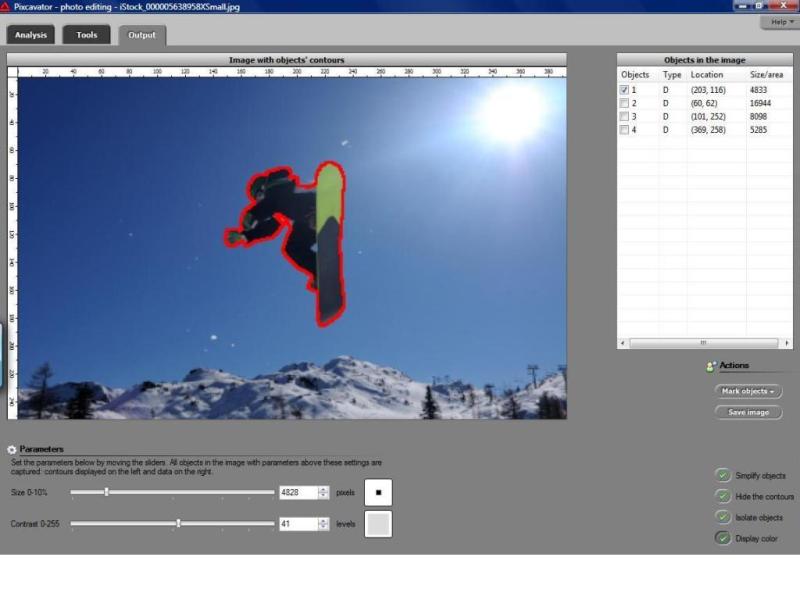

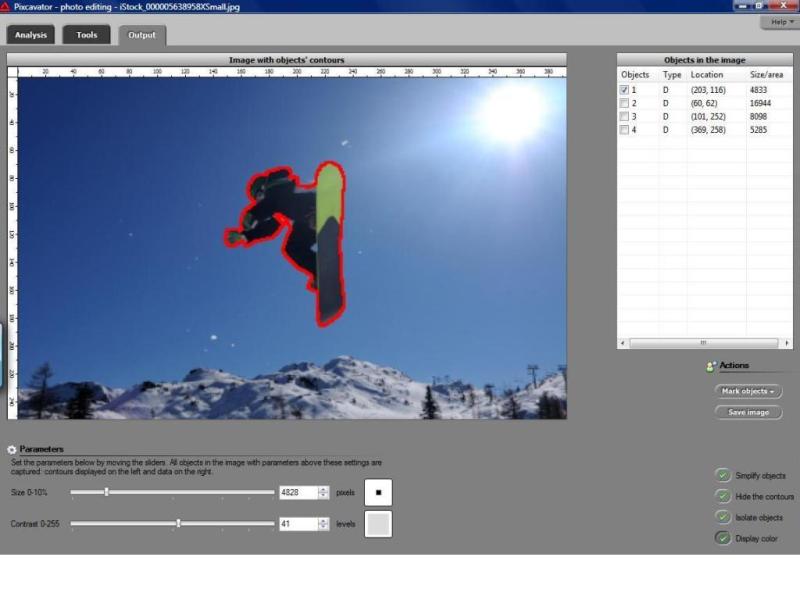

Pixcavator PE (photo editing) is built on the same platform as the standard – image analysis – version of Pixcavator. The roundness and saliency sliders are removed. The output table is reduced to location and sizes only. The features that the standard version does not have are:

- Automatic simplification – small or low-contrast objects are removed throughout, in all three channels. This is truly image simplification, as finer details disappear.

- Manual simplification – a selected contour is filled with the color surrounding it. In other words, this is spot cleaning (in the example, the mole was removed with a single click; nothing against it, though). The feature was available before, but not in color.

- Isolating objects – everything but the selected objects is painted white. In other words, this is background removal.
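The "isolating objects" feature can be illustrated with a minimal Python sketch. It is not Pixcavator's implementation, which is not described here; the assumptions are that objects are 4-connected regions of pixels darker than a fixed threshold of 128, that the image is a list of rows of RGB tuples, and that `dark_components` and `isolate` are hypothetical names.

```python
from collections import deque

WHITE = (255, 255, 255)

def dark_components(img, threshold=128):
    """Return 4-connected regions of pixels whose mean intensity is below threshold."""
    h, w = len(img), len(img[0])
    is_dark = lambda y, x: sum(img[y][x]) / 3 < threshold
    seen, components = set(), []
    for y in range(h):
        for x in range(w):
            if (y, x) in seen or not is_dark(y, x):
                continue
            comp, queue = [], deque([(y, x)])
            seen.add((y, x))
            while queue:  # breadth-first flood fill of one object
                cy, cx = queue.popleft()
                comp.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and (ny, nx) not in seen and is_dark(ny, nx):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            components.append(comp)
    return components

def isolate(img, keep):
    """Background removal: paint every pixel outside the kept objects white."""
    kept = {p for comp in keep for p in comp}
    return [[img[y][x] if (y, x) in kept else WHITE for x in range(len(img[0]))]
            for y in range(len(img))]

D, G = (0, 0, 0), (200, 200, 200)  # dark object pixel, light background pixel
img = [[D, D, G, D],
       [D, D, G, G],
       [G, G, G, D]]
largest = max(dark_components(img), key=len)
print(isolate(img, [largest]))  # only the 2x2 dark block survives
```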

For more examples, see the site.

More on the topic will appear in the wiki under Image manipulation.

April 13, 2008

Guest post by Ash Pahwa.

CellProfiler was developed at the Whitehead Institute for Biomedical Research and MIT by Anne Carpenter’s group. This software is designed for mass processing of biomedical images. The main focus of the software is to identify and quantify cell phenotypes. I tested CellProfiler version 1.0.4628, published on April 23, 2007. It is open-source software that also works with the Open Microscopy Environment (OME). The software is an interface tool for MATLAB, and it comes with a runtime version of MATLAB.

The unique feature of CellProfiler is that it allows mass processing of images. The user specifies two items: first, the folder where the images are stored, and second, a list of operations to be performed on the images. This list of operations is called a “pipeline”. Once both items are specified, the processing starts and every operation in the pipeline is performed on every image. The results of all the operations can be exported to a database or an Excel spreadsheet. However, CellProfiler does not have database management capabilities comparable to those of cellAnalyst.

The standard operations are as follows:

- Cell counting,

- Cell size,

- Cell identification,

- Per-cell protein levels,

- Cell shape,

- Sub-cellular levels of DNA,

- Protein staining.

Besides these features, CellProfiler also provides many other advanced image processing tools used primarily with biomedical images. Since CellProfiler is an interface to MATLAB’s Image Processing Toolbox (IPT), all the IPT functions can also be applied to the images, for example special filters, histogram equalization, frequency filters, etc. Users can also write their own MATLAB scripts for custom image processing tasks. The primary market for this product is settings where images are captured in large quantities, so analyzing each image individually is not possible. In these circumstances, CellProfiler can be used to analyze the images while saving time as well as improving the accuracy of the analysis.
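The batch “pipeline” idea described above can be sketched in a few lines of Python. This is not CellProfiler’s API (there the pipeline is assembled from modules in its interface and runs on MATLAB); the in-memory grayscale images, the two measurement steps, and all function names are hypothetical stand-ins, and CSV output stands in for the spreadsheet export.

```python
import csv
import io
from typing import Callable, Dict, List, Tuple

Image = List[List[int]]                   # grayscale image: rows of 0..255 values
Step = Tuple[str, Callable[[Image], float]]

def mean_intensity(img: Image) -> float:
    values = [v for row in img for v in row]
    return sum(values) / len(values)

def dark_pixel_count(img: Image, threshold: int = 100) -> int:
    return sum(v < threshold for row in img for v in row)

def run_pipeline(images: Dict[str, Image], pipeline: List[Step]) -> List[dict]:
    """Apply every step of the pipeline to every image; one result row per image."""
    rows = []
    for name, img in images.items():
        row = {"image": name}
        for step_name, step in pipeline:
            row[step_name] = step(img)
        rows.append(row)
    return rows

def to_csv(rows: List[dict]) -> str:
    """Export the results in a spreadsheet-friendly format."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

images = {"well_A1": [[10, 200], [20, 220]], "well_A2": [[250, 250], [90, 250]]}
pipeline = [("mean", mean_intensity), ("dark_pixels", dark_pixel_count)]
print(to_csv(run_pipeline(images, pipeline)))
```

The point of the design is that the list of operations is defined once and then applied uniformly, so adding a measurement means adding one entry to `pipeline`, not touching the loop.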

April 7, 2008

I kept thinking about the issue of image analysis vs. computer vision. This is how it was interpreted in the article:

- Image Analysis: image in -> features out.

- Computer Vision: image in -> interpretation out.

The problem I had with this approach comes from this example: even though computing the distribution of colors in the image is analysis, it does not tell us anything about the contents of the image. My take was:

- Low level image analysis = image processing.

- High level image analysis = low level computer vision.

- High level computer vision = image understanding.

Now I want to clarify this idea. The difference between low level analysis and high level analysis is that the latter reveals the content of the image, possibly on a low level. But how? My answer is:

Low level analysis is local and high level analysis is global.

There is a simple test for that:

The analysis is local when cutting the image into pieces and reassembling them in an arbitrary way does not affect the results.

You can even imagine that you arrange the pixels in a single row. You can analyze those pixels all you want, but they can’t reveal the content of the picture! Here are some examples.

Local analysis:

- anything based on color/intensity histogram;

- statistics (mean, standard deviation, etc.);

- anything based on local filtering, in particular edge detection.

Global analysis:

- Image segmentation;

- Fourier analysis;

- texture and pattern;

- morphological analysis (but only if the output is still image segmentation).
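The cut-and-reassemble test can be tried directly. The minimal Python sketch below (function names hypothetical; a binary image with 1 as foreground is assumed) cuts an image into single pixels and reassembles them in a fixed, different order. The histogram and the mean, both local, do not change; the number of objects, used here as a simple stand-in for image segmentation, goes from 1 to 4.

```python
from collections import Counter, deque

def histogram(img):
    """Local: depends only on the multiset of pixel values."""
    return Counter(v for row in img for v in row)

def mean(img):
    values = [v for row in img for v in row]
    return sum(values) / len(values)

def count_objects(img):
    """Global: number of 4-connected regions of foreground (1) pixels."""
    h, w = len(img), len(img[0])
    seen, count = set(), 0
    for y in range(h):
        for x in range(w):
            if img[y][x] == 1 and (y, x) not in seen:
                count += 1
                seen.add((y, x))
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < h and 0 <= nx < w and img[ny][nx] == 1 and (ny, nx) not in seen:
                            seen.add((ny, nx))
                            queue.append((ny, nx))
    return count

def rearrange(img):
    """Cut the image into single pixels and reassemble them in a different order."""
    h, w = len(img), len(img[0])
    flat = [v for row in img for v in row]
    shuffled = flat[::2] + flat[1::2]  # a fixed permutation of the pixels
    return [shuffled[i * w:(i + 1) * w] for i in range(h)]

img = [[1, 1, 0, 0],
       [1, 1, 0, 0],
       [0, 0, 0, 0],
       [0, 0, 0, 0]]
mixed = rearrange(img)
print(histogram(img) == histogram(mixed), mean(img) == mean(mixed))  # True True
print(count_objects(img), count_objects(mixed))                      # 1 4
```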

Interestingly, ImageJ’s Features page makes no mention of image segmentation:

Analysis:

- Measure area, mean, standard deviation, min and max of selection or entire image.

- Measure lengths and angles.

- Use real world measurement units such as millimeters.

- Calibrate using density standards.

- Generate histograms and profile plots.

The only global item on that list is #2. And one still needs to find something to measure – it would have to come from image segmentation.

In visual image search (CBIR), image analysis is typically local: color distribution, edge distribution, other “descriptors”. Studying patches instead of pixels is still local if you measure the patches in pixels (filtering, morphology). But suppose you cut the image into 100 patches and then collect global information from each patch. Rearranging these patches is unlikely to produce a real-life image. Lincoln from MS Research and some others operate this way.

To summarize,

High level analysis = global analysis = low level computer vision.

April 1, 2008

I decided to rename the wiki from “Computer Vision Wiki” to “Computer Vision Primer”. “Wiki” is such a general (and generic) term that it is easy to confuse our wiki with other wikis related to computer vision. The word “primer” helps to make a point about what makes ours different: we focus on the fundamentals and try to keep it very accessible. CVprimer.com is also easy to remember. Finally, when I get around to turning this into a book, Computer Vision Primer will be a good title.

There have been some additions to the wiki. I did quite a lot of editing throughout, for example in Overview. I started to add articles on measurements of objects: saliency, mass, average contrast, diameter, minor and major axes, Euler number, robustness of geometry and topology. Those are still quite thin. In the article Machine learning in computer vision, I summarized the recent blog posts on the subject. There are also many red links; those are articles I plan to write.

Pixcavator PE (photo editing) is to be released in just a few weeks. It is a simplified version of the image analysis version, but it will also have a couple of new features. These features and many more will appear in Pixcavator 2.5.

cellAnalyst now has an online counterpart. You can upload your images, analyze them, save the data, and search images, all in your browser. Create your free account here. Feedback will be appreciated… Meanwhile, AssaySoft has been incorporated.
