This page is a part of the Computer Vision Wiki. The wiki is devoted to computer vision, especially low level computer vision, digital image analysis, and applications. The exposition is geared towards software developers, especially beginners. The wiki contains discussions, mathematics, algorithms, code snippets, source code, and compiled software. Everybody is welcome to contribute with this in mind - all links are no-follow.

Image Simplification

From Computer Vision Wiki

Start with Binary Images.

Size based simplification

The partition of the image makes it possible to simplify the image by removing some features, i.e., cycles. The approach is as follows. The user is given an option to keep in or extract from the image the features the characteristics of which lie within the limits chosen by him. Meanwhile, if all limits are set to zero, the visualization of the output produces the original image.

The size-based simplification means removal of features of “small” size. Since a feature is represented by a cycle as a sequence of edges, by “size”, we understand the area of the interior of the cycle. Then small black and/or small white regions are removed. More generally, the size can be the integral of any continuous function of two variables over this region. This characteristic is updated at every step of the algorithm and recorded in the nodes of the graph. From the current frame, a feature is removed if its size is less than a given level. Of course, sometimes it is the small features that interest us. Example is minutiae in fingerprint identification.

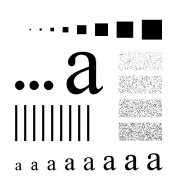

Figure. This is an example of the removal of features from a binary 500×500 image based on their size. The largest area of the features removed for (a)-(d) is 0 (the original), 100, 300, and 1000 pixels, respectively.

Several different kinds of “sizes” may be used simultaneously. For example, to remove a scratch from a photograph choose area/perimeter < .5. Similar settings would also help to identify and extract roads from high resolution satellite images or ridges from noisy fingerprint images.

Exercise What restrictions on the area and the perimeter should one choose to separate squares from circles?

Other characteristics of the objects can be computed as line integrals. Those include but are not limited to perimeters, moments (and, therefore, centroids), curvatures, etc. These are the area integrals of a function f(x,y) over the exterior boundary of the cycle. For the sake of meaningful simplification, the function f(x,y) should be chosen non-negative. This will ensure that the removal of a component would also imply the removal of all of its holes, components located in these holes, etc.

After the run through the image it is simplified. Below we assess cycles by considering their areas and perimeters. Here, the minimal area, minArea, and the minimal length, minPerimeter, are the same for all cycles independent of dimension or location. These are the main simplification settings.

unsigned long minArea = 0; // min area of the cycle, selected by the user

unsigned long minPerimeter = 0; // min length of the cycle

int Evaluation( struct Cycle *Cycle0 )

{

if( Cycle0->Area < minArea )

return(0); // inactive

if( Cycle0->Perimeter < minPerimeter )

return(0); // inactive

return(1); // active

}

To visualize the simplified image, active 0-cycles are filled with black while active 1-cycles are filled with white, as follows. One moves along the row until an active edge is crossed. Then, until another active edge is reached, the pixels are drawn black. After next active edge, the pixels are white, until another active edge is reached, etc. Since an inactive feature is bounded by inactive edges, it will be missing from the output as it adopts the color of the surrounding area.

bool *SimpFrame = new bool [ dim1*dim2 ];

void Simplify()

{

// simplification of the image

int edge[4], back[4] bool color

for(int k=0;k<dim1k++)

for(int l=0;l<dim2l++)

{ if( l == 0 ) color = 0; // each row starts with white

edge[0] = k; edge[1] = l; edge[2] = 1; edge[3] = 0; // vertical edge

Reverse( edge, back );

Cycle1 = GetCycle( edge ); // edge

Cycle2 = GetCycle( back ); // .. and back

if( Evaluation( Cycle1 ) == 1 || Evaluation( Cycle2 ) == 1 ) // if active

if( color == 1 ) color = 0; else color = 1; // ..switch color

SimpFrame[l+dim2*k] = color; // simplified frame

}

}

Exercise Add to the program the simplification procedure and the ability to choose the simplification settings. The additional output should be the simplified image and its Betti numbers. Verify the correctness of these numbers.

Exercise Add the option of simplifying the image based on the combination of area and perimeter (such as their ratio). Experiment with the program. What kinds of objects are you able to remove?

Exercise If a white object, 1-cycle, touches the border it is not counted. Modify the algorithm so that 1-cycles that touch the border of the image are included.

In case of gray scale images, the procedure is similar.

Simplification is carried out in the end of processing of each frame. To visualize the gray scale image, active 0-cycles are filled with the current gray level while the active 1-cycles are filled with white, as follows. Starting with gray level 0, a recoloring procedure is carried out for each level of gray from 0 (black) to 255 (white). Given a gray level, one changes the colors of some pixels in each row of the image to this new color. One moves along the row until an active edge is crossed. Then, until another active edge is reached, the pixels are redrawn using this gray level. After next active edge, the recoloring stops, until another active edge is reached, etc. Since an inactive feature is bounded by inactive edges, it will be missing from the output as it adopts the color of the surrounding area.

void Simplify(int frame) // simplification of the frame

{ int k,l; int edge[4], back[4];

for( k=0; k<dim1; k++ )

for( l=0; l<dim2; l++ )

{ if( l == 0 ) color = 0; // each row starts with white

edge[0] = k; edge[1] = l; edge[2] =1; edge[3] = 0; // vertical edge

Reverse( edge, back );

Cycle1 = GetCycle( edge ); // edge

Cycle2 = GetCycle( back ); // .. and back

if( Evaluation( Cycle1 ) == 1 || Evaluation( Cycle2 ) == 1 ) // if active

if( color == 1 ) color = 0; else color = 1; // ..switch color

SimpFrame[l+dim2*k] = color; // simplified frame

}

// creating of the simplified of the image

for( k=0; k<dim1; k++ ) // create a simplified image

for( l=0; l<dim2; l++ )

{

// if the pixel was changed from black to white

if( CurrFrame[l+dim2*k] == 1 && SimpFrame[l+dim2*k] == 0 )

imageS[l+dim2*k] = frame; // opposite

if( CurrFrame[l+dim2*k] == 0 && SimpFrame[l+dim2*k] == 1 )

imageS[l+dim2*k] = frame-1;

}

// rewrite the frame

for( k=0; k<dim1; k++ )

for( l=0; l<dim2; l++ )

PrevFrame[l+dim2*k] = CurrFrame[l+dim2*k];

}

The contrast based simplification of images

Start with Grayscale Images.

The output of the algorithm after all frames have been processed is the collection of all current cycles.

An important characteristic of a cycle is its “lifespan”. A component emerges at frame B (birth) and merges with the surrounding area at frame D=255 (death); or a hole emerges in an object at frame B and is filled at frame D. The total lifespan is D - B. In case of a grays scale image, this is simply the contrast of the feature. Therefore, the result of the simplification is that the features with the contrast below a given threshold T are removed below. It is especially important that features of high contrast are preserved and features of low contrast are removed regardless of their gray levels.

Suppose we want to remove all cycles with contrast below a given level, T. Then the first T frames of the image sequence will be all white. The result is that the simplified image looks pale. This is the effect of “mountaintop cutting”. Therefore, in order to visualize the simplified image better, the gray levels of pixels may have to be reassigned as a post-processing step. A natural choice is the following piecewise linear function. If the gray level L is less than T it is replaced with 0 (black), if L is greater than 255-T it is replaced with 255 (white), for the rest L is replaced with 255(L-T)/(255-2T). This is just one of many methods of “histogram stretching”.

An example of the removal of features from a gray scale image based on contrast. The contrast of the features removed for (a)-(d) is less than 5, 50, 75, 127, respectively. Below, the contrast settings were chosen 40 and 80.

Exercise Suppose we simplify the image below under contrast based simplification by increasing the contrast threshold? What object will disappear first, last?

The flip side of image simplification is feature extraction. If objects, such as a cancer tumor, blood cells, can be quantitatively described, the algorithm will isolate them from the rest of the image. The objects can be extracted as binary regions, contours, or gray scale images.

Examples

The size-based simplification: the features of “small” size are removed from each frame. Since a feature is represented by a cycle as a sequence of edges, by “size” we understand the area of the interior of the cycle, just as before. Then small black and/or small white regions are removed. More generally, the size can be the integral of any continuous function of two variables over this region or a line integral along the cycle representing the region. These characteristics may be updated at every step of the algorithm and recorded in the nodes of the graph. From the current frame, a feature is removed if its size is less than a given level.

Below is an example of the removal of features from a gray scale image based on size. The largest area of the features removed for (a)-(d) is 0 (the original), 100, 300, 500, respectively.

Several different kinds of “sizes” may be used simultaneously. For example, to remove a scratch from a photograph choose area/perimeter < .5. Below (a) is the original and (b) is the image with all the features with area/perimeter < .5 removed.

Exercise What settings would help to identify and extract roads from satellite images? What about ridges from fingerprint images?

The output of the algorithm after each frame is the collection of all current cycles. Next, the Evaluation command either accepts or rejects each of these cycles. This command rejects the current cycle if its numerical parameters are different from (especially, lower than) the ones set by the user. The remaining cycles and their edges are called active. The current cycles with low area are marked as inactive and not recorded into the graph of the simplified image.

The simplification can be localized to an area chosen by the user. For example, the area to be simplified is marked with a region selection tool or an airbrush. Then the function f(x,y) is chosen small in this area and large outside. As a result, the features outside this area are not affected by the chosen size restrictions. Alternatively, the area outside the chosen region is completely ignored.

Exercise Implement the “localization” of processing outlined above.

During processing, the areas, perimeters, and contrasts of the objects are computed. The image simplification settings are chosen based on a priori knowledge about the image. For example the image with coins is 300×246. To count the coins and ignore the small features depicted on the coins, one sets the limit of 1000 pixels for the area. The algorithm produces the simplified image on the right and the output “10 light objects”.

The images illustrate how the objects may be captured without segmentation. An object can also be “extracted” from the image be removing all other objects.

In the next image a breast cancer tumor was isolated by setting the limit on contrast equal to 70 and setting an additional restriction on “roundness” of objects. The result is the simplified image on the right and the output “1 dark object and 1 light object”.

In the next image, noise was added to the 512×512 image of 'Lena', and then the image was denoised by choosing the limits of 20 for the area and 20 for the contrast. Observe that this approach to denoising leaves boundaries intact.

Exercise 1 What is a reasonable way to combine the contrast and size (sizes) of an object as a measure of its importance?

Exercise 2 Add to the program the simplification procedure and the ability to choose the simplification settings. The additional output should be the simplified image and its Betti numbers. Verify the correctness of these numbers.

Exercise 3 Add the option of simplifying the image based on the combination of area, perimeter, and contrast. Experiment with the program.

Top finds

- Casinos Not On Gamstop

- Non Gamstop Casinos

- Casino Not On Gamstop

- Casino Not On Gamstop

- Non Gamstop Casinos UK

- Casino Sites Not On Gamstop

- Siti Non Aams

- Casino Online Non Aams

- Non Gamstop Casinos UK

- UK Casino Not On Gamstop

- Non Gamstop Casino UK

- UK Casinos Not On Gamstop

- UK Casino Not On Gamstop

- Non Gamstop Casino UK

- Non Gamstop Casinos

- Non Gamstop Casino Sites UK

- Best Non Gamstop Casinos

- Casino Sites Not On Gamstop

- Casino En Ligne Fiable

- UK Online Casinos Not On Gamstop

- Online Betting Sites UK

- Meilleur Site Casino En Ligne

- Migliori Casino Non Aams

- Best Non Gamstop Casino

- Crypto Casinos

- Casino En Ligne Belgique Liste

- Meilleur Site Casino En Ligne Belgique

- Bookmaker Non Aams

- カジノ ライブ

- онлайн казино с хорошей отдачей

- スマホ カジノ 稼ぐ

- ブック メーカー オッズ

- Top 3 Nhà Cái Uy Tín Nhất

- Trang Web Cá độ Bóng đá Của Việt Nam

- Casino En Ligne Avis

- Casino En Ligne France

- Casino En Ligne

- 꽁머니 토토

- Casino Online Non Aams